How the Wheatstone Concertina Ledgers were Digitized for Publication on the Web and CDIntroductionMargaret Birley, the Keeper of Musical Instruments at the Horniman Museum, has written an introduction to the project to digitize the ledgers of the C. Wheatstone & Co. concertina factory at the Horniman Museum, London. She lists there the goals for the project: (1) to increase public access to these manuscripts in the Museum’s collection, (2) to promote worldwide scholarly research about them, and (3) to better preserve the manuscripts by making it unnecessary to handle them. This document describes exactly how those purposes were carried out, how the 2,300 manuscript pages were digitized and made freely available for research on the web and on low-cost CDs. Sufficient detail is included to enable anyone to undertake a similar project. 1. The ProjectThe concertina had become the high-tech musical sensation of England by the 1850s. Invented and patented by Professor Charles Wheatstone in London, the instrument combined novel acoustic principles with innovative mechanical design to produce expressive musical sound in a notably light and compact package. As the craze grew, high-volume production was engineered for Wheatstone by Louis Lachenal, a Swiss immigrant to London. Over two decades, what began as a research prototype turned into an industrial product. The heart of this activity was C. Wheatstone & Co., the company run by Professor Wheatstone and his brother. In its great period, Wheatstone sold concertinas to all the rich and famous people of mid-Victorian London. It was joined by competitors, mostly Wheatstone employees who left to set up for themselves, including eventually Lachenal himself. The Wheatstone company is still in business today, still making concertinas, though now only a trickle. Many business records from Wheatstone & Co. still exist, from the 1840s on. They record the first beginnings, the explosion of interest in the 1850s, the cycles of popularity up to about the second world war, and then a decline of interest after 1950. Everything we have was recorded in ledgers—the earlier ones written with a split pen with some pencil entries, the latest ones written mostly with a biro (ballpoint). All the known records have come to the Horniman Museum in London, as part of their world-famous collection of musical instruments which includes over 600 concertinas and much related documentation. Several years ago, the Horniman Museum realized that there was broad interest in the Wheatstone Concertina Ledgers, which could be best served by photographing the ledgers and posting them on the internet. I volunteered to undertake this project personally—mostly because I wanted to study the ledgers myself and make them available for others to study, but also to work out how a typical manuscript archive could be prepared for the web in the simplest way without requiring fund-raising or consultants. There were two phases to the project: (1) five twentieth-century ledgers, 1910-1974, about 1,100 pages, roughly 8 inches x 10 inches (20 cm x 25 cm); (2) twelve nineteenth-century ledgers, 1834-1891, about 1,200 pages, mostly about 4 1/2 inches x 7 inches (11 cm x 18 cm). I started the planning in 2001, then started work on the newer ledgers in 2002, and they were completed and released on the web in the Spring of 2003. The older ledgers followed, using the same procedures, and have now been completed for release in the Spring of 2005. I could only work at the task an average of one evening a week, apart from a couple of solid weeks spent at the Horniman Museum to scan the ledgers. In total the job took me about 1,000 hours—it could have been done by one person working full time in six months, or by a couple of people in three months. The completed website contains about 4,500 pictures and about 4,500 web pages, woven together with over 100,000 linking references, a total of 700 megabytes of information. The website can be used from any personal computer over the internet, with no need to download or install anything. The whole site is also available on a single CD, which is sold inexpensively online and at the Horniman Museum shop. From the CD, the website can be copied to any personal computer or local server, or to a USB flash drive you can carry in your pocket. I spent the minimum necessary and used the simplest tools. I used my own modest laptop computer to do the whole job (a 2002-vintage IBM ThinkPad with a 866MHz processor). My total expenses were about $50 for software and supplies, plus a new consumer-grade scanner for the Museum ($150). An interesting feature of the project was that I used familiar office software to do the whole job. The webpages were generated by entering all their data into Microsoft Excel spreadsheets, and then using Microsoft Word's MailMerge feature to insert the data into templates to create the individual webpages (the coding for the templates was written by hand, not generated by Word). Enhancement of the scanned images was done using an old version of a modestly-priced graphics program. The result, though, compares favorably to similar projects undertaken by high-priced consultants using much more elaborate equipment and financed by national libraries. Nothing in this project was compromised by my using this "simple" approach. The completed site is now online at http://www.horniman.info. The graphical navigation is based directly on the ledgers themselves, with small pictures of all the books leading to indexes of all the individual pages. The photographs of the ledgers are more legible than the original books, and can be enlarged to twice actual size to examine detail. Any pages or parts of pages can be put side by side for comparison, pages can be bookmarked or printed for reference, and users anywhere in the world can view the pages at the same time and discuss them by telephone—that is, all the advantages of browsing a website apply. Now that the ledgers are digitized, the originals can be put away and only need to be consulted on very rare occasions, a big help to their preservation. The Horniman can use a standard personal computer with a CD copy of the website to offer access to library visitors. Best of all, people all over the world can consult the ledgers directly, without having to come to London. The first batch of ledgers was made available on the website two years ago. Over those two years, nearly 12,000 unique visitors from 88 countries all around the world have viewed the ledgers in over 90,000 separate sessions. Many of the visitors are casual visitors who are looking to find out about their own concertinas and only need to look at the ledgers once, but there is also a core of serious researchers: nearly 1,600 people have visited repeatedly, an average of 49 sessions each. Visits to the site in the second year approximately doubled over the first year, and with the release now of the older ledgers (which contain more information and enable more kinds of research) we expect a further increase in visits. The total cost of the website is a flat $165 per year, for top-tier fast commercial hosting on a BSD Unix server with professional management and backups (this includes all personnel and facilities costs—everything). The cost is not dependent on traffic, within any conceivable limit, so the cost per visitor will continue to decrease. Reductions in other costs to the Horniman Museum, particularly in avoiding the costs of having curators answer email and postal inquiries about the information in the Ledgers, produce a net savings while achieving much broader access. 2. PlanningI prepared a 25-page planning document, which required about a month’s work (actually done over a longer period). Every separate step needed careful planning, such as: - who the users would be,

- what the user interface for access on screen would look like exactly,

- what computers and browsers would be supported,

- which manuscript ledgers would be scanned,

- how their condition dictated what handling was advisable,

- how many individual pages would be scanned and what sizes,

- how many files would result,

- how the files would be named and organized,

- how much storage they would require during intermediate steps,

- and how much storage they would occupy in finished form.

All the planning could be done using information freely available on the web. As with most projects, all the planning has to be done first because after work begins changes and improvements to the design result in lost effort. It was during this planning period that we realized our need for both web access and for CD distribution. First, some of our users still have only dial-up access to the internet, which is adequate for the casual question but intolerably slow for serious research. If we could rely on high-speed broadband internet access for all our users, then web access alone might be enough; but since we cannot, a requirement for CD distribution arises. A second advantage of the CD format is that the whole site can easily be copied from a CD to the hard disk of a personal computer, for purposes such as carrying the information into other research libraries on an ultra-light laptop, or for making a local copy available on a local area network for shared use. We realized that this was also important for the Museum’s own internal use: with the information on CD, the Horniman’s own Library can use any personal computer as a reference station, without having to provide broadband internet or local network connections. Producing CDs has one other small benefit: the latest CD is a high-integrity copy of what the website should be, with no uncertainty. If any disaster should hit the existing web server, it could be reloaded very simply from the latest CD, or a new server computer could be initialized in a new location with equal ease. Either operation could be carried out immediately, without help from specialized technicians and without extra expense. The requirement for CDs influenced the detailed design of the project, but did not increase the amount of work required. A very important decision was that our aim would be to present to those viewing the website or CD exactly what they would see if they came to the Horniman's Library and sat at a reader’s desk: neither more nor less. To avoid delivering less, we digitized and included on the site all the covers of the ledgers, the blank pages, inserted scraps of paper, and in the case of one set of production records written in the back of a commercial “diary” we included 125 printed pages of standard reference information such as postal rates to distant parts of the empire and a listing of members of parliament. To include every bit of every ledger was not a lot of extra work, but it had to be planned. It required more discipline to avoid delivering more, to strictly provide only images of the pages as they are. By avoiding all interpretation we cut out many possible sources of editorial delay. Also, so little is known about these ledgers that any added interpretation would inevitably contain mistakes. For instance, the production ledgers are full of abbreviations for materials and parts and processes that we know little about. Some early readers of the ledgers confidently identified the initials of a craftsman "W.S." who was recorded as having worked on quite a number of concertinas over the years, but further study has suggested that the proper interpretation of "W.S." is likely to be "Wrist Straps". In one ledger, there is a stub where a leaf has been torn out and at that same point there is a brown envelope inserted containing in hurried writing what appears to be a transcription of most of the missing leaf. We think that we know who ripped out the leaf, and to whom the leaf was given (and why), but here we reproduce only the mute evidence of the missing page and the brown envelope. Some (actually very many) of the names recorded in the sales ledgers as early purchasers of concertinas are those of eminent Victorians, the titled and the wealthy. This will make a great story, but we don't comment on them. All the interpretation can be handled in separate publications which will become available on the web, beyond the scope of this project. 3. Computer Equipment UsedI used my own IBM ThinkPad laptop computer (bought in 2002 before the work on this project got underway). This computer was limited in some ways, having only an 866MHz processor and a 12-inch screen, but it had enough memory (640MB), enough disk storage (30GB), and was running Windows XP. This computer was used for the scanning of the documents, and for all steps in the preparation of the website and CD master. Three years on, it is unnecessary to start a project like this by finding funds to buy a new computer. A computer superior in every way could be bought today for less than $1000, but almost any personal computer bought in the last few years is likely to be more than adequate. Before choosing a scanner, the staff in the Museum’s conservation department were consulted, as at all critical stages of the project, and they considered that the ledgers were robust enough to be scanned—with care—on a simple flatbed scanner. I did buy a new scanner (for $150), because we were worried that the ledgers might contain so much workshop dust that they could contaminate the Museum’s scanner. I figured that if my scanner got dirty I could throw it away, but that didn't happen. Apart from a consideration like dust, there is no need to buy a new flatbed scanner; there have been only minor advances in the last few years, and almost any existing scanner is good enough. Scanners sold through consumer channels are entirely adequate for any research digitizing project. I already had an ordinary color inkjet printer (cost about $200), which was used for testing the printing from the website. No other hardware or equipment was needed for the project. I did test the final result on a wide range of operating systems and browsers before release, but I just borrowed access to a variety of machines owned by friends (and to find a Macintosh, rented an hour of time at an Internet cafe). There is no need to build a testing laboratory nor to hire consultants. For software, I used almost entirely what I already had. (Any tool that one already knows how to use correctly is likely to be superior to any new tool that one has to learn.) For scanning, I used the manufacturer's software that came free with the new scanner. For graphics, I used Fireworks 4 from Macromedia, which was already used for other web-graphics purposes, but any similar graphics program would have worked just as well (Adobe Photoshop is the best known, although more complex and more expensive). All the rest of the work to make the website was done with components of Microsoft Office which came on the computer—Excel, Word, and FrontPage. The only new software purchased for this project was a convenient bulk file-renaming utility, bought for $20 over the web. 4. ScanningTo scan I just carefully put a volume open to each page on the glass of the flatbed scanner and pressed the button. In order not to damage the edges of the ledger pages, they were not compressed up against any edge of the scanner but put down in the middle of the glass; this made it harder to get the volume exactly straight. Each page had to be carefully aligned by inspecting its reduced scanned image on-screen against a rectangular screen grid and adjusting the position before final scanning. It took between 2 and 3 minutes for each scan, including all related record-keeping. The larger ledger books (the newer ones) were scanned one page per scan (1,100 pages on 1,100 scans); the smaller ledger books (the earlier ones) could be scanned with two facing pages at a time (1,200 pages on 600 scans). So the total was 2,300 pages on 1,700 scans.

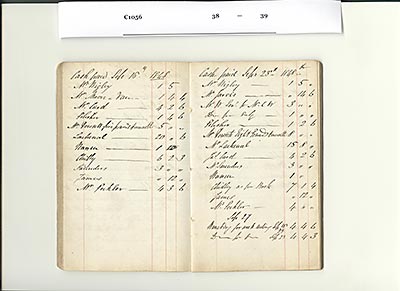

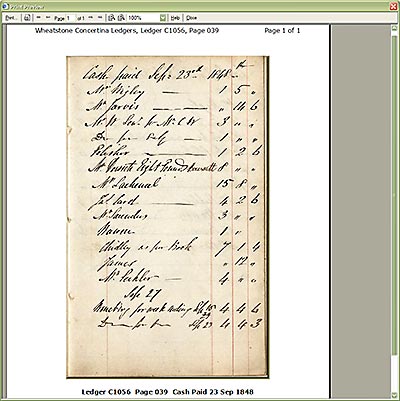

Scan of Ledgers

(Click for larger image.)

Where part of a book had to project over the edge of the scanner, it was supported on improvised platforms suggested by the Conservation department, made of plastic foam blocks (reused packaging material) covered with soft white tissue paper and built up to the exact level of the scanner glass, so that no stress was put on the binding of the books. So far as we know, none of the ledgers suffered any damage from being scanned. The scanner was periodically dusted with a whisk to remove dust from the glass. There was no need for an expensive scanner specialized for books or for a vacuuming system; the flatbed scanner and the whisk were entirely adequate. A book with normal binding and a sufficiently-wide gutter need not be pushed down flat against the glass; the depth of field is good enough that the scanner can look up into the gutter just fine, with a bit of distortion from foreshortening which is normally not noticed (if the foreshortening is objectionable, it can be corrected later in the scanned image). Some of our ledgers were bound like that, and others were saddle-stitched so they opened flat. Because of the careful handling needed, no existing kind of automation could have speeded up the scanning. It was important to identify the scans, by ledger and page number. The pages are not self-identifying for the most part, so shown a random scan there would be no way to be sure which page it records. To prevent mistakes, I pre-printed small strips of paper with the identifying codes for the ledgers and page numbers, one strip of paper for each scan to be made. At the time of scanning, the page was verified and the matching strip of paper was laid on the scanner glass beside the book; after that scan the strip of paper was filed away as completed. Hence, each scan shows a page image plus a readable identification of that page which cannot become separated. This makes each scan self-identifying for later processing, and for possible re-processing years later. Experiments and evaluations by other projects support the idea that there is really one best choice for scanning manuscripts or typescripts: full color (24 bits per pixel) at 300 dpi [“dpi” = “dots per inch”] or better. The color greatly aids human interpretation of the scans, but compresses well so that it adds only modestly to storage requirements. I kept the volume of information practical by having the scanner do light JPG compression of about 20-to-1 when saving each scan (and observed some care later to make this decision harmless). So each full-color 300dpi scan, which would be about 20MB uncompressed, was stored as about 1MB compressed. Using 1MB per page for the original data is practical; 1,700 scans total about 1.7GB. This makes it easy to back up the precious original scans to removable storage (three CDs, or a single DVD, or a PC-Card disk or a USB flash drive) to keep them safe. Scanning took me less than two weeks of full-time work (1,700 scans at 2.5 minutes each is a total of 71 hours total scanning time). The scanning could be done within the Horniman Museum, by carrying in the laptop computer and attaching it to the scanner on site, so there was no need to send manuscripts to other locations and no Museum staff were required to design security and to accompany them. After scanning within the Museum, copies of the scanned images could be easily removed and further work done elsewhere, so there was also no long-term requirement for working space within the Museum. The original scans as saved from the scanner, each containing an image with the identification of ledger and page, were immediately preserved on-site without any further processing. They were accessioned as permanent records of the Museum and put into the institutional database archive, so that they can always be found later and subjected to alternate processing for unforeseen future purposes. All work for this project was done with copies of the original scans. 5. Image ProcessingThe scanned images benefit from further processing to enhance their readability, and in fact after enhancement they can be considerably easier to read than the original manuscript documents. This step is not one of applying artistic judgment, but rather using common transformations already built into the image-processing software. We planned to present two sizes of images to researchers: a smaller size which would fit a whole document page on most computer screens, and a larger size which would be at least as clear as the original though it would require scrolling to see all parts of the page on screen. For documents the size of our later notebooks (pages about 8 inches x 10 inches, 20 cm x 25 cm), an image at 60dpi would just about fit on a computer screen (in a browser with ordinary toolbars and scrollbars). For closer examination, a 150dpi image would be two-and-a-half times as large, much bigger than the screen but (by our tests, and in full color) adequate to examine any fine detail on the page. For our older notebooks, with smaller pages about 4 1/2 inches x 7 inches (11 cm x 18 cm), we decided to increase these numbers. An image at 96dpi would fit on most screens and be close to natural size, while showing a whole page at once. For closer examination, a 192dpi image would be twice as large and would provide even finer detail than for the larger pages. But for any screen on which multiple ledgers were shown, such as the indexes which contain miniature pictures of all the books, a consistent scale was used for all images. I first of all opened each 300dpi JPG-format scan and de-skewed it if necessary—about a quarter of the scans required rotation, usually by only half of a degree, one direction or the other, very occasionally by a full degree. I then reduced the scan to 150dpi/192dpi (the largest size for distribution), cropped the scan to a fixed size at the page edges (a different natural size for each ledger), and saved the result in a format called “PNG” (about 5MB each for each cropped page—much larger than the compressed scans at higher resolution). For only a handful of scans was it necessary to edit an image in an particular way to make its presentation clearer. For instance, one page had an inch or so of its corner missing (broken off by flexing) and on the scans the broken edge was nearly invisible so that the material from the next page showing in the missing area could possibly have been misleading. It was possible to very slightly enhance the broken edge where the corner was missing so that the true situation became visually obvious on the masters, as it would be in a library. The resulting full-size PNG masters were then the basis for all further processing. Images in the JPG format suffer some slight degradation every time they are opened and re-saved, whereas images in the PNG format can be opened and re-saved repeatedly. By making the conversion at this point, an image has been saved in JPG format only once so far (at the time of original scanning).

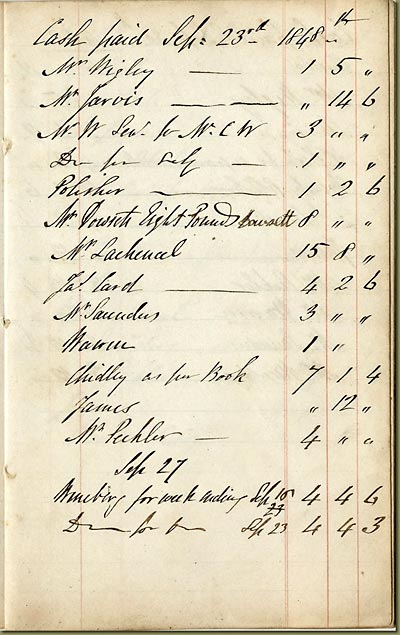

Large Display Image

(Click for larger image.)

From the 150dpi/192dpi PNG masters, I then made the images which would actually be viewed. All in one step, I opened the 150dpi/192dpi images, reduced their color saturation slightly, did "contrast-stretching" to make the paper a bit lighter and pencil a bit darker, adjusted levels for midtones, applied minor sharpening, then added a drop-shadow to set the page off from the background, and saved the result as a 150dpi/192dpi image in compressed JPG format, again at only about a 20 to 1 compression ratio. Hence, a final image has been subject to moderate JPG compression exactly twice in its whole life; this assures that any artifacts of JPG compression are too minor to notice. Then the same process was repeated to make the smaller images: open a 150dpi/192dpi PNG, resample to 60dpi/96dpi, reduce color saturation, contrast-stretch, adjust levels, sharpen slightly more aggressively for the smaller images, add the drop-shadow, and save as a 60dpi/96dpi JPG. The large and small pages must be processed to look exactly the same except for size. It is sometimes claimed that this kind of editing cannot be practically carried out on a low-end personal computer because it's too slow, but that's clearly not true. The misunderstanding may come about when graphic artists imagine interactively tuning an image and judging the effect of each action. But our case isn't like that. The work to de-skew and crop individual images to make a PNG master does have to be done separately for each image, and it took an average of between 2 and 3 minutes per image—as much time as the original scanning. But then all the remaining standard operations to turn a PNG master into a JPG for display are not done interactively. Once the parameter values for a ledger have been chosen, all the pages for a whole ledger can be processed automatically. If it takes fifteen seconds to process an image, you just start up the batch job on a directory containing 80 images and come back 20 minutes later to find them all completed. These steps took about an hour of wallclock time for each ledger.

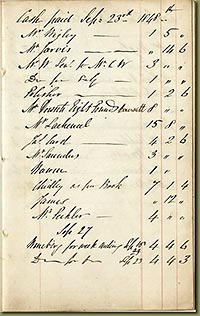

Small Display Image

(Click for larger image.)

At this point there are four versions of each image: the 300dpi JPG from the scanning (about 1MB each), the 150dpi or 192dpi cropped master in PNG (about 5MB each), the 150dpi or 192 JPG for display (about 300KB–500KB each), and the 60dpi or 96dpi JPG for display (about 75KB–125KB each). Only the last two will be included on the website or the CD. All the work described here was done in FireWorks 4. This program is now very dated, having been superceded by a later version in early 2002, but it was used for the duration of the entire project to assure consistency of results. The image files totalled about 1,700 scans, 2,300 PNG masters, 2,300 large JPG files, and 2,300 small JPG files—over 8,500 image files in all. All the files were named in accordance with systematic standards for the project, so that the name of every file indicated (a) the ledger, (b) the page number(s), (c) the size (scan, large, small, or thumbnail), and (d) the format (PNG, JPG). These file naming conventions made it easy to relate various versions of the same page. Considerable storage is required: by the end of the project, all the files (images and otherwise) required more than 15 gigabytes (15,000 megabytes) of disk storage, more than twenty times the size of the finished product. 6. WebpagesImages of the ledger pages are the main point, but not enough. When each image is to be displayed, we need also to show the identification of the ledger and page that the image represents and to display the navigation controls to go to the next image, go back to the previous image, go up to the index of images for the volume, and so forth. This means that every image needs a corresponding webpage (written in the language called “HTML”) to display it.

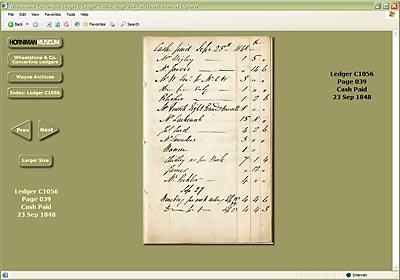

Webpage with Small Display Image

(Click for larger image.)

Every project has some particular requirements. For this project, we needed to have an alternate presentation of every page suitable for printing (plain white background without navigation controls, full A4 or US Letter image of a page, identification of the image in black type). We wanted to use keyboard navigation onscreen (e.g., cursor-right key to go to the next image). We used a very brief Cascading Style Sheet (CSS) document to alter the display for printing, and used a small amount of JScript coding to augment the navigation (though the site is fully usable without JScript, and does not require cookies).

Print Preview from Webpage

(Click for larger image.)

Before creating the HTML, CSS, and JScript coding for the web pages, we had to decide which operating systems and web browsers we needed to support. There is an overwhelming majority of Windows machines; for this reason, Macintosh and Unix machines must adopt a basic compatibility strategy in order not to be excluded from many websites, so the general strategy is to be broadly compatible with a wide class of Windows environments, including older ones, and Macintosh and Unix will then likely fall into place with only a few details to watch. We decided that we would be compatible with all versions of Windows from Windows98 forward (Windows98, Windows98SE, WindowsME, WindowsNT, Windows2000, WindowsXP) which includes almost every machine in use (and should extend into the future). Most web browser upgrades are free and hence recent versions of these programs are widely used. We had decided in late 2002 that we would test full compatability with Internet Explorer 6 (47%), Internet Explorer 5 (44%), and Netscape 7/Mozilla (1%)—for a total of 92% of then-current browser usage; and work with some less-essential features missing on Netscape 4 (2%), Internet Explorer 4 (1%), and Opera (1%)—a total of 96%. For Macintosh, we would verify that Internet Explorer 5.1 worked. Our motive then for including these last few browsers, especially Netscape 4, was the suspicion that some people who seldom browsed the internet might try to look at our CD offline, and we wanted them to see something without having to update their browsers. During the remaining course of the project the percentages of browser use changed a little bit, but the general picture remained much the same. By early 2005, Internet Explorer (5 and 6) had maintained just about level share at over 90% usage. Older browsers from the Netscape 4 period had largely fallen out of use, so we stopped testing with them. We also dropped later versions of Netscape and replaced them by Mozilla Firefox, which had recently been released and had taken over the Netscape/Mozilla portion of the market. As a practical matter, Internet Explorer and Firefox (both free) now define browser compatability. Looking forward, the compatability strategy is to use on our webpages only language features (in HTML, CSS, and JScript) which are both very common and handled correctly now, and hence are likely to be correctly rendered by any future browsers which could supplant the current market leaders. Anyone can examine the webpages to see the exact decisions made. (The Macintosh amounted to about 2% of personal computer sales, but a new operating system had been shipped, so we verified that both Internet Explorer for Mac 5.2 and Safari 1.2.4 worked correctly.) The textbook recipe to organize a website such as ours would be with a database: you put all the ledger images and all their related data into a database, and then when any image is requested a program pulls that data out of the database, consults a template, dynamically creates an HTML webpage, and sends the webpage down the wire with the image following; the user’s browser interprets the HTML for display, finds links in the HTML page to the required CSS and JScript, and inserts the image on the screen where it belongs. A database-backed website is very general and flexible, and in fact is the approach used here, but in a very special way: what we do is to limit the queries we will answer, go through all of them querying the database, and then cache all of the answers. With a 100% cache, there is no need to deliver the database or the query tool to users; it is enough to just deliver the cached results along with a user interface to restrict queries to those for which we have pre-calculated answers. This means that we use the database only during the construction of our website, and build the entire site using static HTML webpages. All the HTML webpages that can be displayed are pre-computed and filed away; none is built dynamically in response to requests; only an existing page can be chosen. This extremely-simple approach is the fastest one, and it imposes less overhead of storage space than might be expected; very roughly, each ledger-page has a large image of around 400KB, a small image of another 100KB, and two HTML display webpages totaling about 10K; so all the data for each source-page takes about 510KB, of which the the compressed images take 98% of the storage space anyway, and adding all the static HTML webpages only adds 2% of the total storage requirements for the site, less than about any database mechanism. Our requirement for a CD version which can work without any internet connection also makes a conventional dynamic database-backed website impractical. The more powerful our database processing, the less plausible that we could install the programs we needed and run them on a wide range of low-end PCs, on both Windows and Macintosh machines. And we wanted anyone with a CD to be able to copy the working site to any personal computer or server (Windows, Mac, Unix), which presents the same problem. By using the database to build the site initially and then dispensing with it for retrieval of pre-computed queries, we have a simple approach which will work on any computer with its own compatible web browser. Having decided to use static HTML webpages, our next need was to write about 4,600 webpages (one for every large and one for every small image that could be requested), all with almost identical text; clearly these would need to be written by some program. In addition to these, we needed a couple of dozen other webpages for directories and indexes and explanations, which could be written by hand. To create the 4,600 webpages, I used two tools from the Microsoft Office suite that I was already familiar with—Excel and Word. For each ledger (typically 100–200 pages) I created two Excel spreadsheets, one for its large images and one for its small images. Each Excel spreadsheet has one row for every page in the ledger, containing the filename for its image, the filenames for its "next" and "previous" images, the filename for its alternate-size image, the image size to be used for printing, the page number and name, and so forth—all the information that would vary from webpage to webpage. Most of this information can be calculated by formulas in the Excel spreadsheet, so it was not necessary to type in all the information manually. I then typed a template for an HTML webpage into Word, inserting “fields” where the information would vary and was to be inserted from the Excel spreadsheet. Finally, I used Word's “Mail Merge Wizard” to insert the filenames and parameters from a row of the spreadsheet into each successively-generated webpage, just like inserting names and account balances into form letters. A final step was needed to run the generated “form letters” through a ten-line macro in the Word program (written in VBA, and copied off the Microsoft website with a small addition) to strip out extra word-processing information and leave just the bare text of the HTML web pages, broken up one per file, ready to copy to the website.

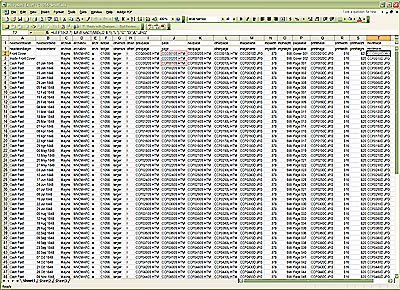

Excel Spreadsheet for a Ledger

(Click for larger image.)

In this way I could get all the varying information correct once in the spreadsheets, then write a single webpage template (and test the HTML for that template on different browsers and operating systems), and finally automatically generate 4,600 customized variants of the template. It took several tries to get all the variable content right, and it would have been impossible to produce all the webpages by hand. I also used Word's "directory" feature in Mail Merge to generate the indexes for each volume. This left only a handful of webpages to type in manually.

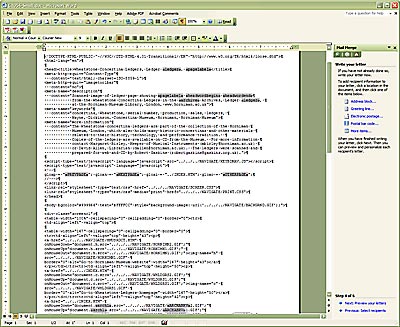

Word Template for MailMerge to Webpages

(Click for larger image.)

I haven't seen Word and Excel used in this way before, but the model of “Mail Merge” is obviously applicable; a webpage on our site, like any form letter, contains mostly standard content in common with all the other pages, plus a few spots where the individual information for each particular webpage needs to be inserted. The idea is very straightforward. Anyone who knows about Mail Merge can understand exactly what is needed, can verify the results, and can fix any mistakes. Even if someone knows a programming language which could be used to do the same job, using Word and Excel is apt to be at least as quick and as easy, and there is no compromise in the result. In the terms of the discussion above, I used Excel as the database and I used Word's templates and MailMerge as the tool to generate and cache all the query responses (webpages). But these programs are employed only during generation of the site. A user of the website (on the internet or on CD) does not need to have Excel and Word, or even know that they exist. Indeed, a user of the website does not need anything but a generic web browser, and there is nothing at all to download—no viewers or applets or plug-ins or anything else is required. Just go to the internet address or start up the CD, and it works. All the files for the website as a whole—images, webpages, and everything else—were assembled in Microsoft's FrontPage. I did not use FrontPage to create any webpage content. But this program knows about HTML, so it can check all the thousands of webpages to be sure that all the links created by program actually point to real filenames, and it can also catch many other problems. It can accurately copy all the files to web servers, either local disks or remote servers over the internet. For our project, FrontPage checks a website with 8,974 files, including 4,320 image files. The pages contain a total of 106,136 hyperlinks. These numbers underline why such a site can hardly be constructed correctly by hand or checked by eye alone. 7. CDsI determined that the best way to distribute the images both on the web and on CD was to make a website which was not tied to a specific internet location. This is an ordinary website, but constructed so that whenever there is a reference to another part of the same website, that reference is expressed not by a path starting from a fixed internet address for the site, but rather by a path relative to the location of the reference. (These are called “relative URLs”, as opposed to “absolute URLs”.) A website carefully constructed so that all its internal references are relative can be copied around freely. In particular, it can be distributed on CD, and viewed with an ordinary web browser—just use the browser to open the website directly from CD. The files from the CD can be trivially copied by anyone onto a hard disk, where they continue to work as a website. If that hard disk is set up with a web server, the same files are now available over the web from that new location with no further work at all. There are many advantages to using the HTML website format on a CD. By using the browser as the viewer, we avoid the need to create a special (and unfamiliar) interface for the CD, and to write a special viewer program. The CD works just like any website in the familiar way, and all the power of the browser is available—to look at multiple pages in different windows simultaneously, to print to any printer with accurate preview, and so forth. Also, anyone who wonders whether to buy the CD can preview the same files by just looking at the website for free, using the same computer and browser; if the website looks good, the CD will look the same (because it is the same). There is one more minor restriction: to satisfy some CD-ROM standards, all filenames must be in old-fashioned "8.3" format, and all uppercase (e.g., “SAMPLE01.TXT”). This is irritating, but easy to do since almost all the files are generated by programs. Making CDs turned out to be extremely simple. Once a website is constructed in the way described so it can be written on any disk, there is no challenge whatever in writing it onto a CD. I added three files: a text README.TXT file to describe the CD, an AUTORUN.INF file with a couple of lines to start up when a CD is inserted into a drive, and a trivial generic program to perform the task of starting up the user's selected browser and pointing it at the root of the web on CD (various websites offer pre-written versions of this program for free use). Preparing all these took about an hour. To test the CD, I just "burned" the website and the three extra files onto a CD-RW disk on a drive in the laptop computer. These writable CD-ROMs cost less than fifty cents apiece, and we made about a dozen of them for testing as the project progressed. For production of CDs for sale, we are using an internet service company near San Francisco. There are several competitors who offer similar services, and we chose one with a good track record for worldwide shipping. The advantage of these production companies is that they make it easy to distribute CDs with no investment in inventory, no credit-card processing, and no fulfillment effort.

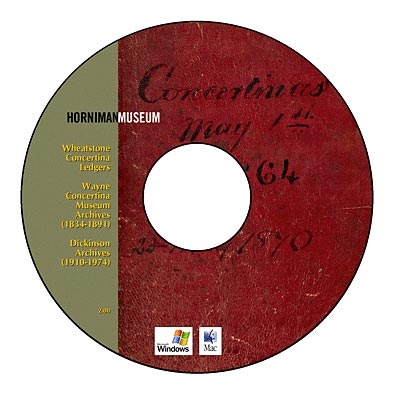

Image for Painting on CDs

(Click for larger image.)

The way it works is this: you upload to the service up to 700MB of data for your CD, or you just send a sample CD to the company. You also create and upload a "label" for the CD—a graphics file for a 1575-pixel doughnut the size of a CD. The production company holds a copy of the data files and the file for your "label" on their servers. There is no up-front cost. Whenever you want a CD, you log on to your account and order one. The company responds by recording your data on a CD and (with a special computer-controlled device) “spray-painting” your full-color graphic onto the top surface of the CD (no stick-on labels!). They will create one CD or thousands of CDs and send them to you, within a couple of days. For orders from other people, the same companies not only do the production, but also fulfillment and customer service. You set up a free "storefront" on their website, where anyone can order a copy of your CD. They receive the orders, process the credit cards, create the CDs on demand, ship them to the requesters, and handle any questions about product or shipping. You set the price, and orders are charged at that price plus shipping. Assuming your chosen price is higher than the manufacturing price (which is about $5.00 per CD including fulfillment and customer service), the extra sum collected is your profit and is remitted to you periodically. You receive notifications of orders, and you can consult full records for your online store at any time. Using this kind of production company made our final steps trivial. We just made a label graphic and uploaded it with our completed files to the company over the internet, and edited our online store description in HTML. Now anyone in the world can place an internet order for a CD containing our website. There is no need for us to employ anyone to do the fulfillment and shipping work, and there is no investment in inventory. When we create an updated or corrected CD, we merely upload it to the production company to replace the previous master and it becomes the source for orders produced from that point forward. 8. Initial ExperienceThe first half of the work was completed for release on 15 April 2003, so that by the final release we have had two full years of experience with how that part of the whole project has been used. During this initial period only the five twentieth-century production ledgers were available on the web; we anticipate still greater interest now that the other twelve nineteenth-century ledgers have been added, more than doubling the records and adding what may be the more interesting volumes (the sales ledgers with names of the early purchasers of concertinas in the mid-nineteenth century, and the cash payment ledgers). To introduce the partly-completed website, we sent CDs to twenty-five individual researchers, libraries, and museums around the world where we thought there were people interested to study the documents we had scanned. We asked three websites specializing in the topic of concertinas to include links to our new website. Here are some figures for the first week of the new website, for the first year, and for the first two years. The units of measure are “unique visitors”, meaning a unique connection to the internet (we can tell when the same connection connects again and usually what country it comes from, but we have no personal data about the visitors), and “sessions”, where a session is a visit to the site by a unique visitor who may look at any number of pages during the session. Total sessions include repeat visits from visitors who come back more than once. (You sometimes see website traffic reported in terms of “hits”, where a hit is a request from a browser for an individual file—a webpage, an image, a stylesheet, a navigation button, etc. A session equals at least a dozen hits, and usually many more, up to many thousands of hits per session.) There were 1,455 sessions in the first week, averaging 200 per day. Of these, 309 were sessions from 309 visitors who came by only once. But 1,146 sessions were from visitors who visited multiple times; and 59 different and unique people visited six or more times each in the single week (in fact, these 59 people visited an average of 17 times each). For the whole first year, there were 32,917 sessions, averaging 90 per day. Of these, 4,068 were sessions from 4,068 visitors who came by only once. But 28,849 sessions were from visitors who visited multiple times; and 653 different and unique people visited six or more times each (in fact, these 653 people visited an average of 43 times each). Extending to the first two years, there were 90,612 sessions, averaging 124 per day. Of these, 8,343 were sessions from 8,343 visitors who came by only once. But 82,269 sessions were from visitors who visited multiple times; and 1,588 different and unique people visited six or more times each (in fact, these 1,588 people visited an average of 49 times each). It’s interesting to note that over the two years, the average visit consisted of looking at only two or three pages, for a total time of four minutes or so (though of course some sessions were marathons); so with high-speed internet access, people really can use the manuscripts as though they were on local shelves, quickly checking a particular point without needing to pre-plan extensive research, and coming back frequently as more questions arise. The user interface evidently supports this need to identify a targeted fact quickly and go directly to it, without confusion. Independent measurements recorded that the site was fully functioning and available 99.9938% of the time (that is, it was unavailable about 30 minutes per year for maintenance). The total size of the data sent from the website to visitors during the first two years was 14,960,000,000 bytes, or just under fifteen gigabytes (14.96 GB). | | 15 Apr 2003

-21 Apr 2003

(1st week) | 15 Apr 2003

-14 Apr 2004

(1st year) | 15 Apr 2003

-14 Apr 2005

(2 years) |

|---|

| Total unique visitors: | 407 | 5,420 | 11,596 | | Total sessions (visits): | 1,455 | 32,917 | 90,612 | | Total page views: | 6,529 | 81,248 | 230,845 | | Total days covered: | 7 | 366 | 731 | | Average sessions per day: | 207 | 90 | 124 | | Repeat visitors: | 98 | 1,352 | 3,253 | | Sessions, repeat visitors: | 1,146 | 28,849 | 82,269 | | One-time visitors: | 309 | 4,068 | 8,343 | | Two-time visitors: | 24 | 400 | 935 | | Three-time visitors: | 10 | 147 | 391 | | Four-time visitors: | 4 | 85 | 207 | | Five-time visitors: | 1 | 67 | 132 | | Six+-time visitors: | 59 | 653 | 1,588 | | Average session duration: | 08:33 | 04:27 | 03:50 | | Sum, all session durations: | 8.6 days | 102 days | 242 days |

During the first two years, visitors came from 88 identifiable countries (in order of pages viewed): United States

United Kingdom

Canada

Netherlands

Sweden

France

Australia

Japan

Germany

South Africa

Ireland

China

Taiwan, Prov. of China

Italy

Denmark

Belgium

Spain

Korea, Republic of

Switzerland

Austria

Brazil

Norway

New Zealand

Portugal

Romania

Czech Republic

Poland

Hong Kong

Mexico

Argentina

Israel

Finland

Greece

India

Russian Federation

Turkey

Hungary

Nigeria

Singapore

Philippines

Peru

United Arab Emirates

Luxembourg

Venezuela

Vietnam

Lithuania

Iran, Islamic Rep. of

Estonia

Croatia

Malaysia

Jordan

Slovenia

Columbia

Egypt

Latvia

Chile

Thailand

Cyprus

Yugoslavia

Costa Rica

Tanzania, United Rep.

Iceland

Ecuador

Senegal

Mali

Indonesia

Puerto Rico

Tunisia

Ukraine

Kuwait

Slovakia

Mauritius

Sudan

Panama

Namibia

Bolivia

Virgin Islands, British

Guyana

Saudi Arabia

Dominican Republic

Sri Lanka

Algeria

Pakistan

Guatemala

Macedonia, Former Yugoslav Rep. of

Jamaica

Bulgaria

Uganda

Before we put the first part of the ledgers on the web, we thought we knew most of the people who were seriously interested in them, and those were the twenty-five people to whom we sent CDs. Since then we have learned that there are 1,588 people who are interested enough to consult our ledgers an average of 49 times apiece in two years. So it would have been a very wrong decision not to build the free public website on the grounds that the material was so specialized that it was of interest only to a handful of people with whom we were already in contact, and we could just send them CDs. The interest is far greater than we knew. We have had not one complaint about the site and not one report of errors or difficulties while using it, while receiving many notes of appreciation, even from people using unusual computers and browser software. This appears to confirm the soundness of the engineering approach chosen. During the first two years, we sold eight copies of the CD of the partial site. This CD was clearly identified as an interim stage, so it may be that purchasers sensibly decided to await the full release at the same price. We can't predict how many CDs of the full site we will sell in a typical future year, but it’s striking to compare eight CDs to the almost 1,600 people who have used the partial website heavily. It is hard to avoid concluding that the free and immediate online access will allow the collection to have a far greater impact on future research than the same information made available for sale even at a modest price. I would like to record my great appreciation to the Horniman Museum and to its staff members for making possible the work described here. The project was incorporated into the program of the Musical Instrument section at the Horniman Museum, and the Keeper, Margaret Birley, contributed substantially to many phases of the work from the initial plan through the final presentation. I also had the benefit of help and advice from Bradley Strauchan, Deputy Keeper of Musical Instruments, Louise Bacon, Head of Conservation, and David Allen, Librarian at the Horniman Museum Library, plus assistance from many other members of the Horniman‘s staff. The Director, Janet Vitmayer, took an informed interest in my progress throughout. I am not connected with the Horniman Museum in any capacity. All the opinions in this document are my own and do not represent the opinons of the Horniman Museum. Appendix: Sample Files To download any of these files, right-click the “LINK” and choose “Save Target As…” from the drop-down menu.) - LINK

Copy of original scan, Ledger C1056 pages 38–39, 300dpi

(Graphics .JPG file, size 947K bytes) - LINK

Large display image, Ledger C1056 page 39, image-processed, 192dpi

(Graphics .JPG file, size 244K bytes) - LINK

Small display image, Ledger C1056 page 39, image-processed, 96dpi

(Graphics .JPG file, size 71K bytes) - LINK

Excel file of merge data for Ledger C1056

(Excel .XLS file, size 86K bytes) - LINK

Word file of merge template for Ledger C1056

(Word .DOC file, size 53K bytes) - LINK

VBA Word Macro to strip extraneous data and write single webpages

(Text .TXT file, size 2K bytes) - LINK

Webpage text for Ledger C1056 page 39 with small image,

generated by mailmerge from Excel into Word template plus macro

(Text .TXT file embedded in .HTM file, size 7K bytes) - www.horniman.info/WNCMARC/C1056/PAGES/CCP0390S.HTM Webpage for Ledger C1056 page 39 with small image, on the website

(Link to completed website)

AuthorRobert Gaskins (robertgaskins@gaskins.org.uk) has studied and taught the use of computers for research in the humanities and linguistics, and is co-author of a textbook on computer programming for students of languages, literature, art, and music. He has also managed the computer science research section of an international telecommunications R&D laboratory, invented a graphics application program, raised venture capital funding and led a startup enterprise to develop and market it, and headed the Silicon Valley business unit of a personal computer software company. He recently created the Concertina Library (www.concertina.com). He divides his time between San Francisco and London, where he is learning to play the Maccann duet concertina. Copyright © 2005 Robert Gaskins

Reprinted from http://www.horniman.info |